Research

Topic 1: Stochastic Convex/Nonconvex Optimization with Applications in Strategic Machine Learning

Goal: To design efficient stochastic algorithms with strong theoretical guarantees, addressing various data-driven challenges.

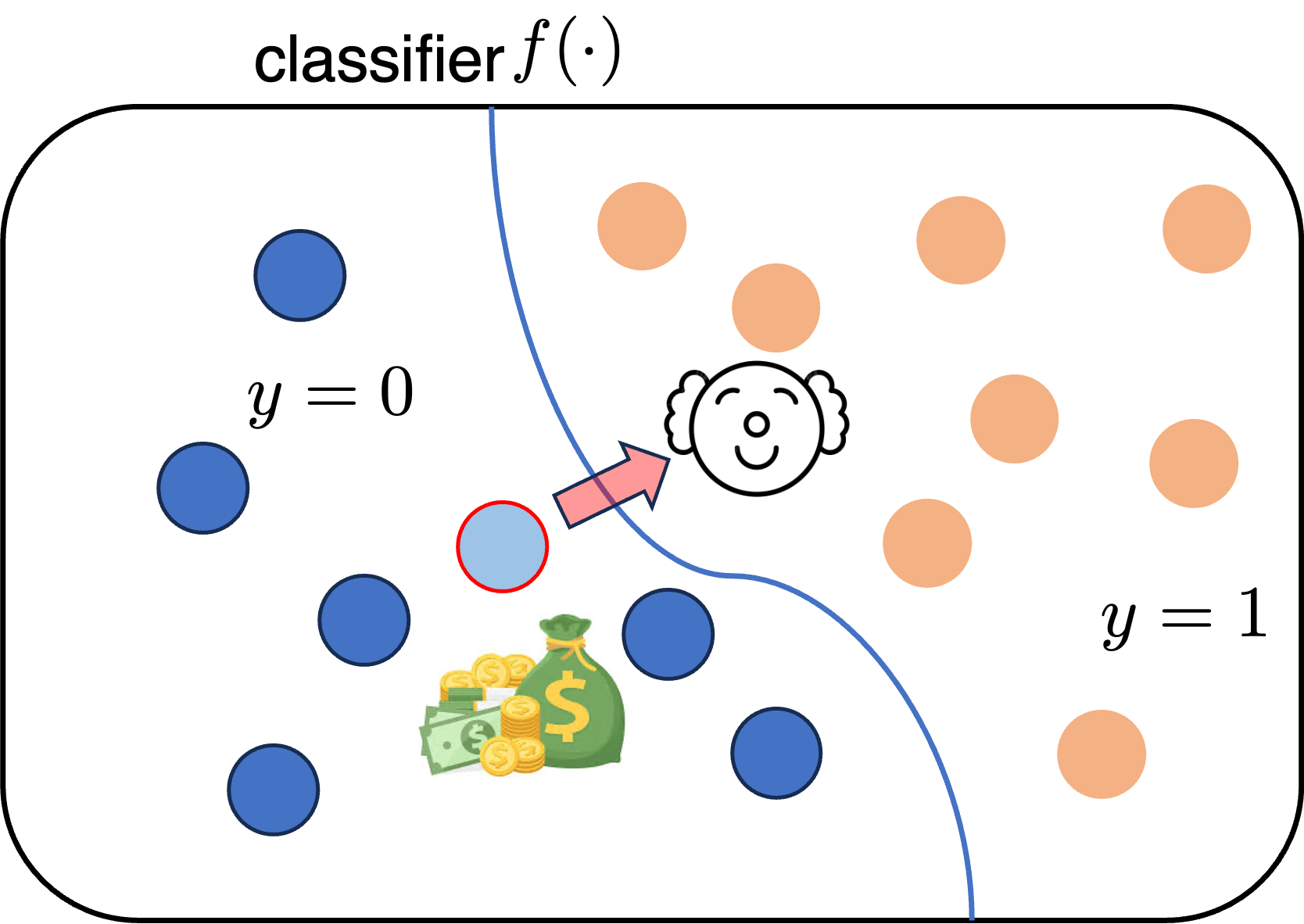

Background: Traditional supervised learning has achieved impressive results in tasks such as classification, regression, and prediction. These methods typically assume that the data distribution is static: the people who generate or provide the data participate passively and do not change their behavior in response to the learning process.

But what happens when human behavior interacts with learning outcomes?

Consider a hiring scenario. Job applicants may tailor their resumes, or gain relevant experience, based on a company’s job description. When the employer evaluates applicants, those who prepared strategically have an advantage, which may improve their chances of being hired. The data the employer observes therefore depends on the criteria it has published.

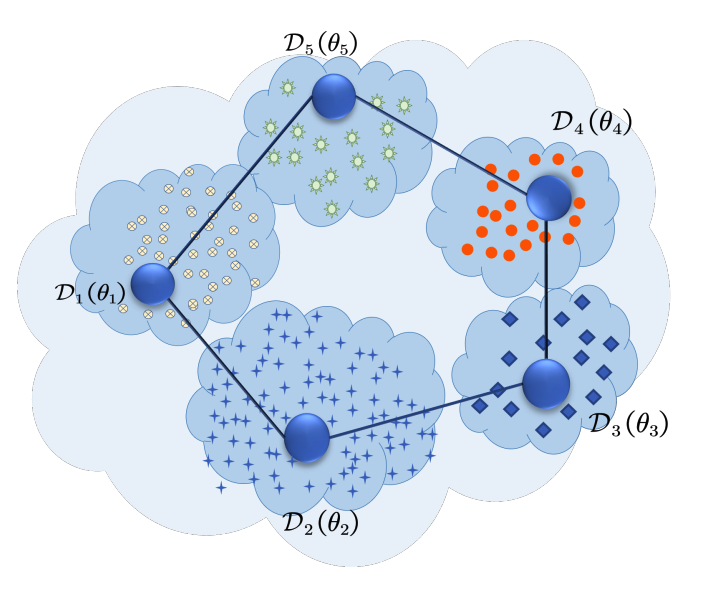

Performative prediction is a framework that accounts for the ways people adapt in response to the learning process itself. It treats the humans who generate data as active agents whose behavior, and hence the data distribution, depends on the deployed model, capturing more realistic behavior “in the wild.”
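To make the idea concrete, here is a minimal sketch under a toy model assumed purely for illustration; it is not the model or algorithm of the publications below. The deployed parameter θ shifts the data distribution, z ~ N(μ + εθ, σ²), and the learner repeatedly deploys its current model, observes a fresh sample, and takes one stochastic gradient step on the loss ½(θ - z)². The function names, the Gaussian location-shift model, and all parameter values are hypothetical.

```python
import random

def sample_data(theta, mu=1.0, eps=0.5, sigma=0.1, rng=random):
    """Hypothetical decision-dependent distribution D(theta): the data mean
    shifts linearly with the deployed model, z ~ N(mu + eps * theta, sigma^2)."""
    return rng.gauss(mu + eps * theta, sigma)

def greedy_deploy_sgd(steps=5000, lr=0.01, mu=1.0, eps=0.5, sigma=0.1, seed=0):
    """Stochastic gradient with greedy deployment: at every step the current
    model is deployed, one sample is drawn from D(theta_t), and one SGD step
    is taken on the loss 0.5 * (theta - z)^2."""
    rng = random.Random(seed)
    theta = 0.0
    for _ in range(steps):
        z = sample_data(theta, mu, eps, sigma, rng)  # data reacts to the deployed theta
        grad = theta - z                              # gradient of 0.5 * (theta - z)^2
        theta -= lr * grad
    return theta

theta_hat = greedy_deploy_sgd()
theta_ps = 1.0 / (1 - 0.5)  # stable point mu / (1 - eps) for this toy model
print(theta_hat, theta_ps)
```

For ε < 1 the iterates settle near the performatively stable point μ/(1 - ε) = 2.0 rather than at μ = 1.0, the minimizer one would obtain if the data did not react to the model: the learner ends up fitting data that its own deployment has shifted.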

Project Overview: This research sits at the intersection of machine learning and game theory. We develop efficient first-order (gradient-based) and zeroth-order (derivative-free, using only function evaluations) algorithms that help a learning agent make robust decisions in complex, dynamic settings. Such settings include environments that evolve over time and scenarios in which adversaries act to manipulate outcomes. Using stochastic optimization techniques, our work aims to improve decision-making in these challenging real-world situations.
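Zeroth-order methods replace the gradient with an estimate built from function values alone, which helps when the loss can be queried but not differentiated. Below is a minimal sketch of one standard building block, the two-point random-direction gradient estimator, applied to a hypothetical quadratic objective. It is a generic construction shown for illustration, not the specific scheme of the papers listed here; the function names and step sizes are assumptions.

```python
import math
import random

def two_point_grad_estimate(f, x, delta, rng):
    """Zeroth-order gradient estimate from two function evaluations along a
    random direction u drawn uniformly from the unit sphere."""
    d = len(x)
    u = [rng.gauss(0.0, 1.0) for _ in range(d)]
    norm = math.sqrt(sum(ui * ui for ui in u))
    u = [ui / norm for ui in u]  # normalized Gaussian => uniform on the sphere
    f_plus = f([xi + delta * ui for xi, ui in zip(x, u)])
    f_minus = f([xi - delta * ui for xi, ui in zip(x, u)])
    scale = d * (f_plus - f_minus) / (2.0 * delta)
    return [scale * ui for ui in u]

def zeroth_order_descent(f, x0, steps=2000, lr=0.05, delta=1e-3, seed=0):
    """Plain descent that only ever calls f, never its gradient."""
    rng = random.Random(seed)
    x = list(x0)
    for _ in range(steps):
        g = two_point_grad_estimate(f, x, delta, rng)
        x = [xi - lr * gi for xi, gi in zip(x, g)]
    return x

# Hypothetical objective with minimizer (1, 1, 1).
f = lambda x: sum((xi - 1.0) ** 2 for xi in x)
x_hat = zeroth_order_descent(f, [0.0, 0.0, 0.0])
print(x_hat)
```

For a quadratic objective, f(x + δu) - f(x - δu) = 2δ ∇f(x)·u exactly, so with u uniform on the unit sphere the estimate d (∇f(x)·u) u is unbiased for ∇f(x); for a general smooth f it is unbiased for the gradient of a smoothed version of f.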

Selected Publications:


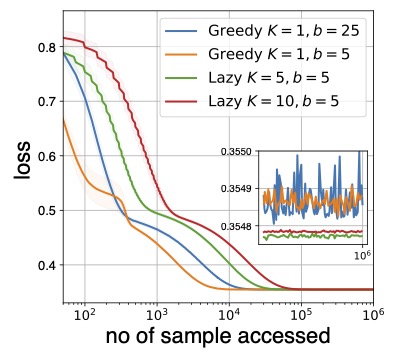

Qiang Li, Hoi-To Wai. Stochastic Optimization Schemes for Performative Prediction with Nonconvex Loss, Conference on Neural Information Processing Systems (NeurIPS 2024). [arXiv link] [code] [poster] [slides]

Topic 2: Large-Scale (Multi-Agent) Optimization

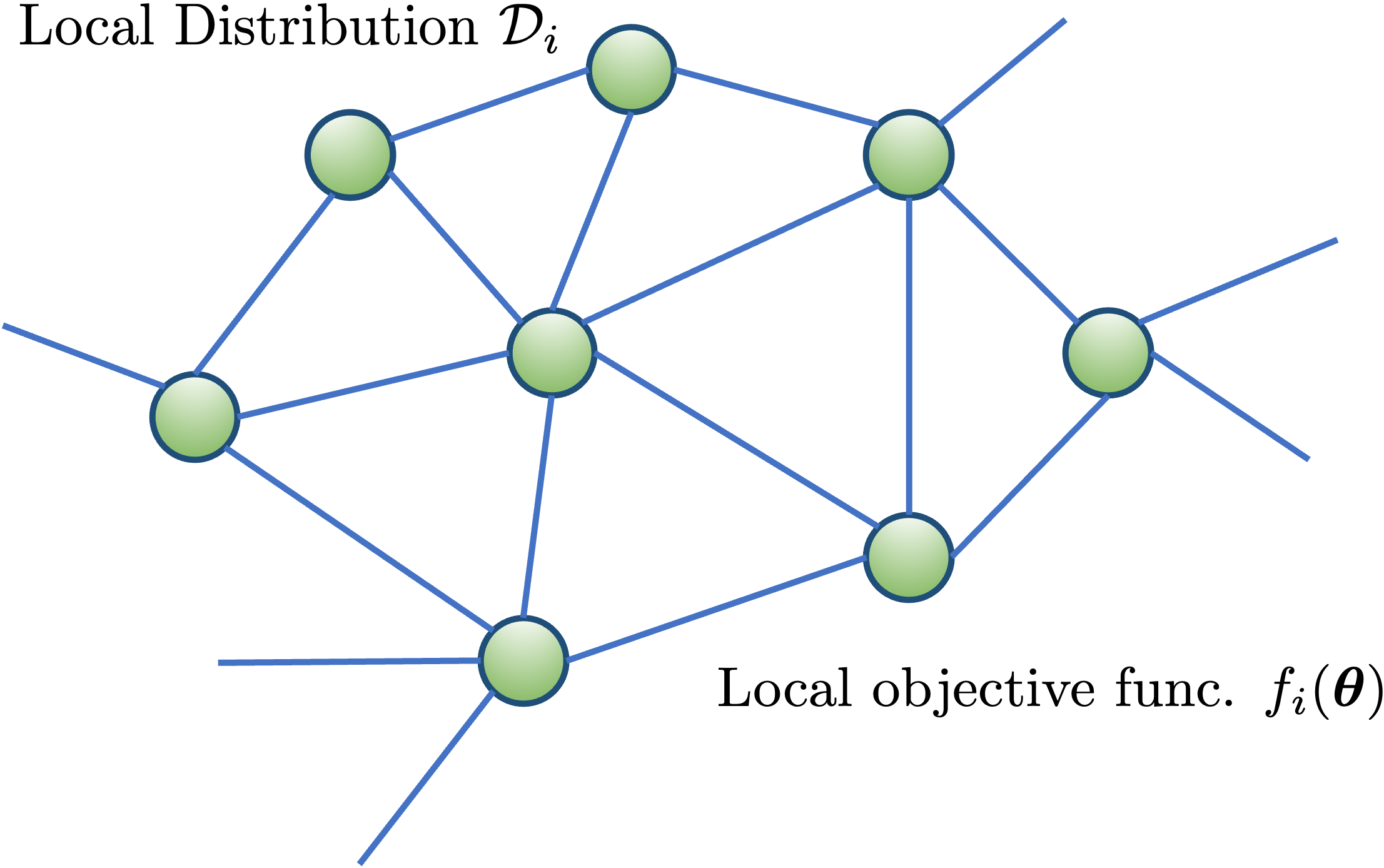

Goal: To design practical algorithms that find optimal solutions in multi-agent optimization systems.

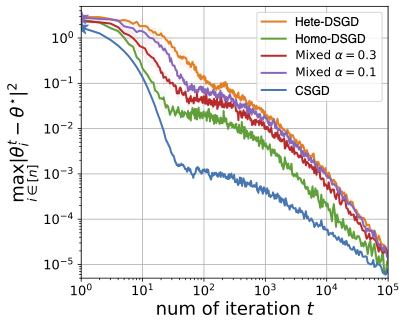

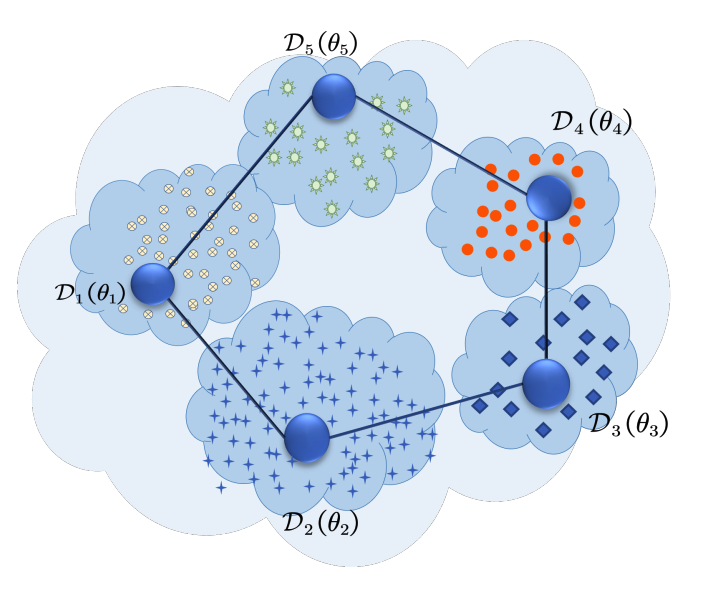

Background: In fields such as wireless sensor networks, multi-agent reinforcement learning, and federated learning, decentralized optimization algorithms play a crucial role and have attracted significant attention. Widely used methods combine local gradient-descent steps with gossip-based communication between neighboring agents; examples include Decentralized SGD, Push-Sum, Gradient Tracking, and NEXT, which are designed to handle undirected, directed, and time-varying communication graphs.
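A minimal sketch of the gossip-plus-gradient pattern shared by these methods, in the style of decentralized SGD, is shown below. It assumes, purely for illustration, five agents on an undirected ring with uniform 1/3 mixing weights, scalar quadratic local losses ½(x - b_i)² with different targets b_i (heterogeneous data), and Gaussian gradient noise; none of these choices, nor the function names, come from the publications on this page.

```python
import random

def ring_mixing_matrix(n):
    """Doubly stochastic gossip matrix for an undirected ring (n >= 3):
    each agent averages its own value with its two neighbours, weight 1/3 each."""
    W = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in (i - 1, i, i + 1):
            W[i][j % n] += 1.0 / 3.0
    return W

def decentralized_sgd(b, steps=3000, lr=0.01, sigma=0.1, seed=0):
    """Decentralized SGD on f(x) = (1/n) * sum_i 0.5 * (x - b_i)^2.
    Agent i only knows its own b_i and only talks to its ring neighbours."""
    rng = random.Random(seed)
    n = len(b)
    W = ring_mixing_matrix(n)
    x = [0.0] * n
    for _ in range(steps):
        # Noisy local gradients, evaluated at each agent's current iterate.
        grads = [x[i] - b[i] + rng.gauss(0.0, sigma) for i in range(n)]
        # Gossip step (mix neighbours' iterates) followed by a local SGD step.
        x = [sum(W[i][j] * x[j] for j in range(n)) - lr * grads[i] for i in range(n)]
    return x

b = [0.0, 1.0, 2.0, 3.0, 4.0]  # heterogeneous local data; global minimizer is mean(b) = 2.0
x = decentralized_sgd(b)
print([round(v, 3) for v in x])
```

Because the mixing matrix is doubly stochastic, the network average of the iterates follows plain SGD on the global objective and settles near mean(b) = 2.0. With a constant step size and heterogeneous b_i, the individual agents do not reach exact consensus: each stays within a distance of order lr of the average.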

How can we design decentralized algorithms for more complex environments, such as those with heterogeneous data (addressing out-of-distribution issues) or strategic data?

Motivated by these challenges, our work investigates how to improve the performance of decentralized algorithms and adapt them to new problem setups, with rigorous convergence guarantees. The project aims to make full use of distributed computational resources, such as those in cloud data centers, by addressing key issues in distributed implementation and improving algorithmic efficiency for real-world applications.

Selected Publications:


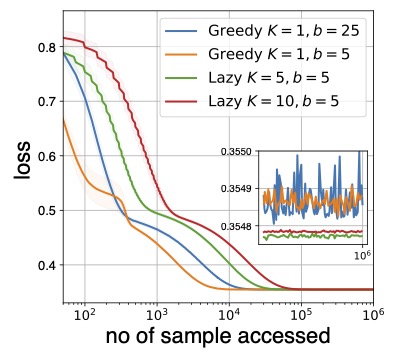

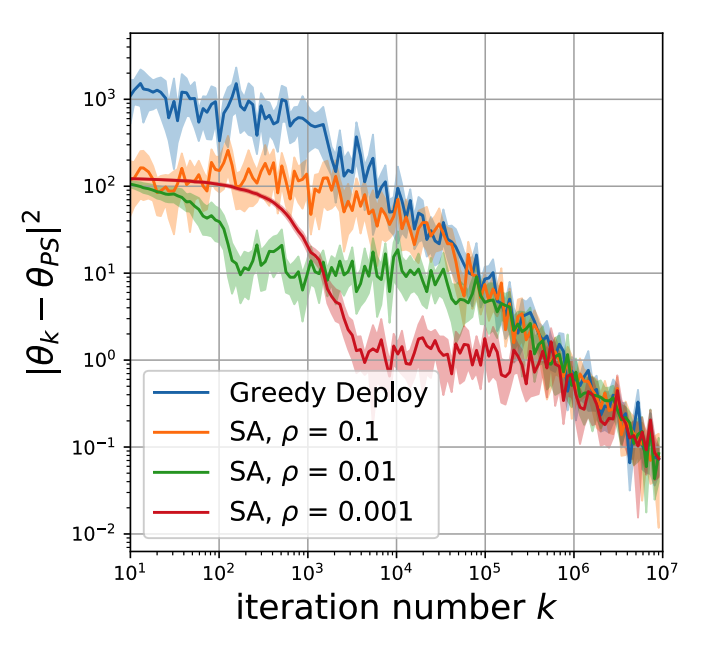

Qiang Li, Chung-Yiu Tau, Hoi-To Wai. Multi-agent Performative Prediction with Greedy Deployment and Consensus Seeking Agents, 36th Conference on Neural Information Processing Systems (NeurIPS 2022). [link] [slides] [video] [poster]

Published Articles

Most of my publications are indexed in my Google Scholar profile; I also try to keep the most recent versions of my publications listed here.

Graduate Research


Qiang Li, Michal Yemini, Hoi-To Wai. Asynchronous and Stochastic Distributed Resource Allocation, IEEE Conference on Decision and Control (IEEE CDC 2025). [arXiv link]


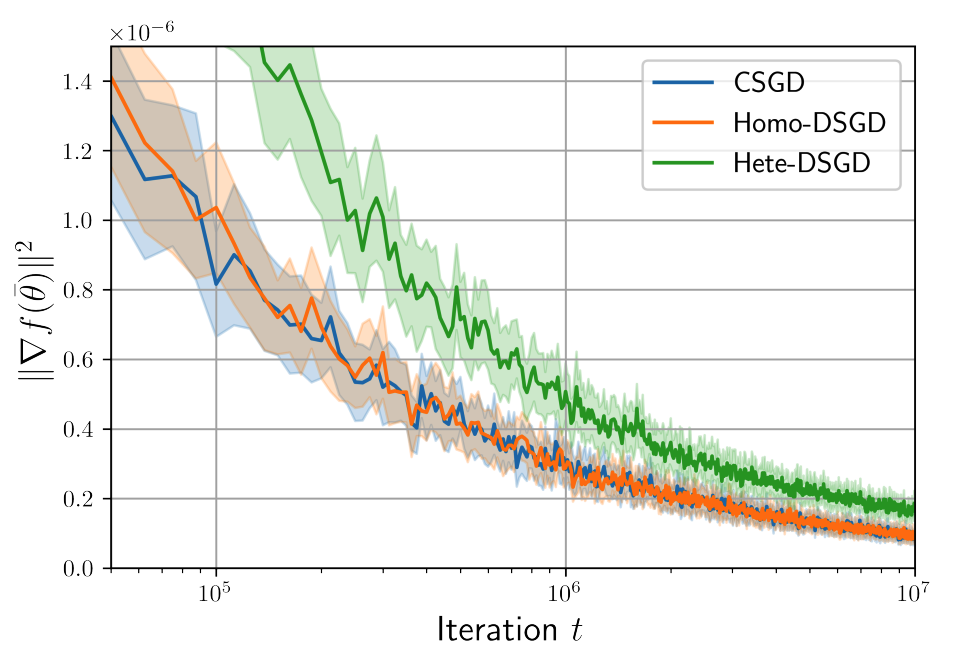

Qiang Li, Hoi-To Wai. Tighter Analysis for Decentralized Stochastic Gradient Method: Impact of Data Homogeneity, IEEE Transactions on Automatic Control, 2024 (full paper). [arXiv link] [code]


Qiang Li, Hoi-To Wai. Stochastic Optimization Schemes for Performative Prediction with Nonconvex Loss, Conference on Neural Information Processing Systems (NeurIPS 2024). [arXiv link] [code]


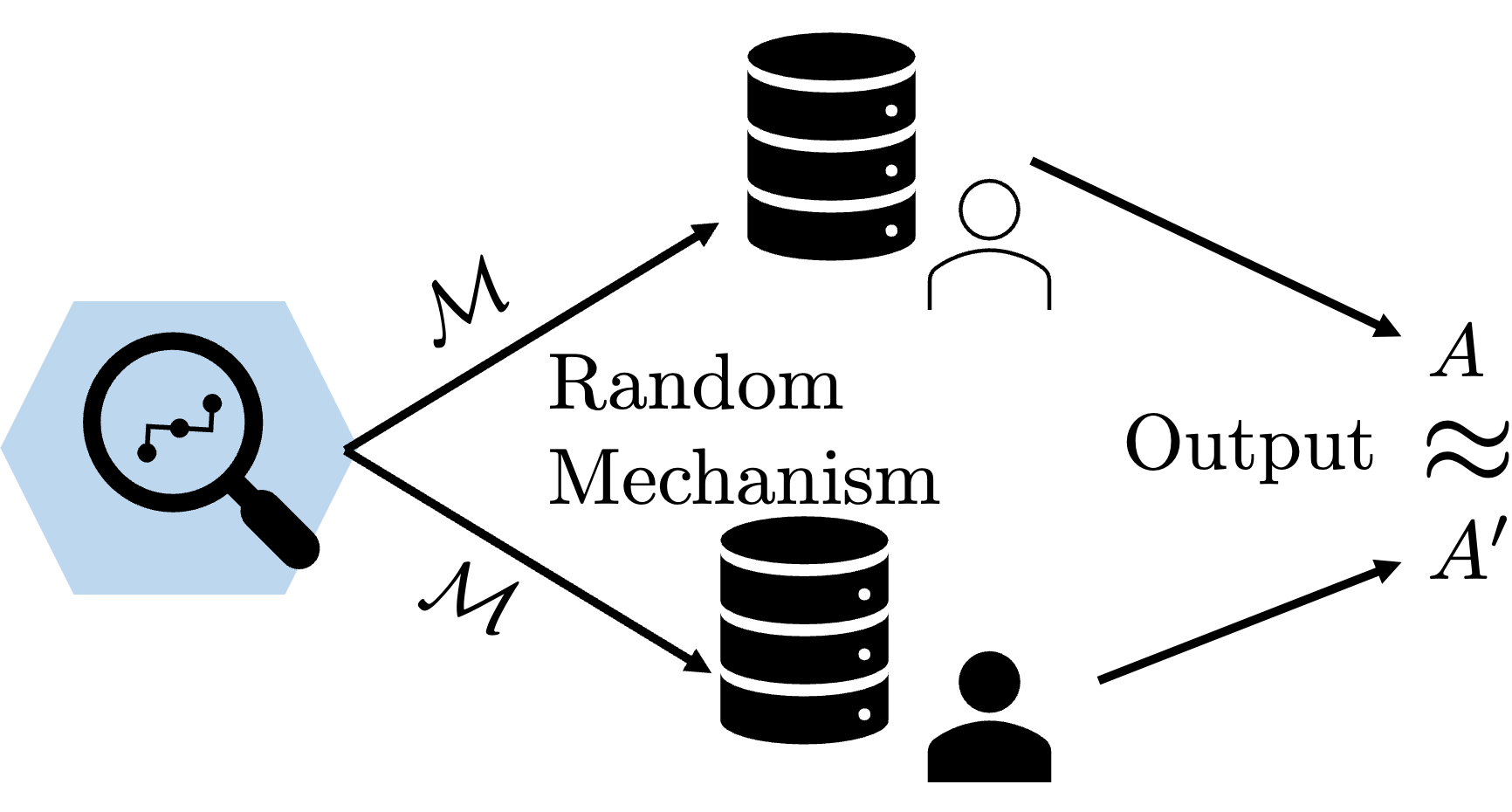

Qiang Li, Michal Yemini, Hoi-To Wai. Privacy-Efficacy Tradeoff of Clipped SGD with Decision-dependent Data, ICML 2024 Workshop on Humans, Algorithmic Decision-Making and Society: Modeling Interactions and Impact. [link] [slides] [poster] [Paper Webpage]


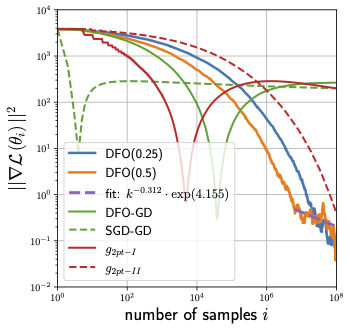

Haitong Liu, Qiang Li, Hoi-To Wai. Two-timescale Derivative Free Optimization for Performative Prediction with Markovian Data, 41st International Conference on Machine Learning (ICML 2024). [link] [poster]


Qiang Li, Hoi-To Wai. On the role of Data Homogeneity in Multi-Agent Non-convex Stochastic Optimization, 2022 IEEE 61st Conference on Decision and Control (IEEE CDC 2022). [link] [slides]


Qiang Li, Chung-Yiu Tau, Hoi-To Wai. Multi-agent Performative Prediction with Greedy Deployment and Consensus Seeking Agents, 36th Conference on Neural Information Processing Systems (NeurIPS 2022). [link] [slides] [video] [poster]


Qiang Li, Hoi-To Wai. State Dependent Performative Prediction with Stochastic Approximation, International Conference on Artificial Intelligence and Statistics (AISTATS 2022). [link] [poster]

Undergraduate Research


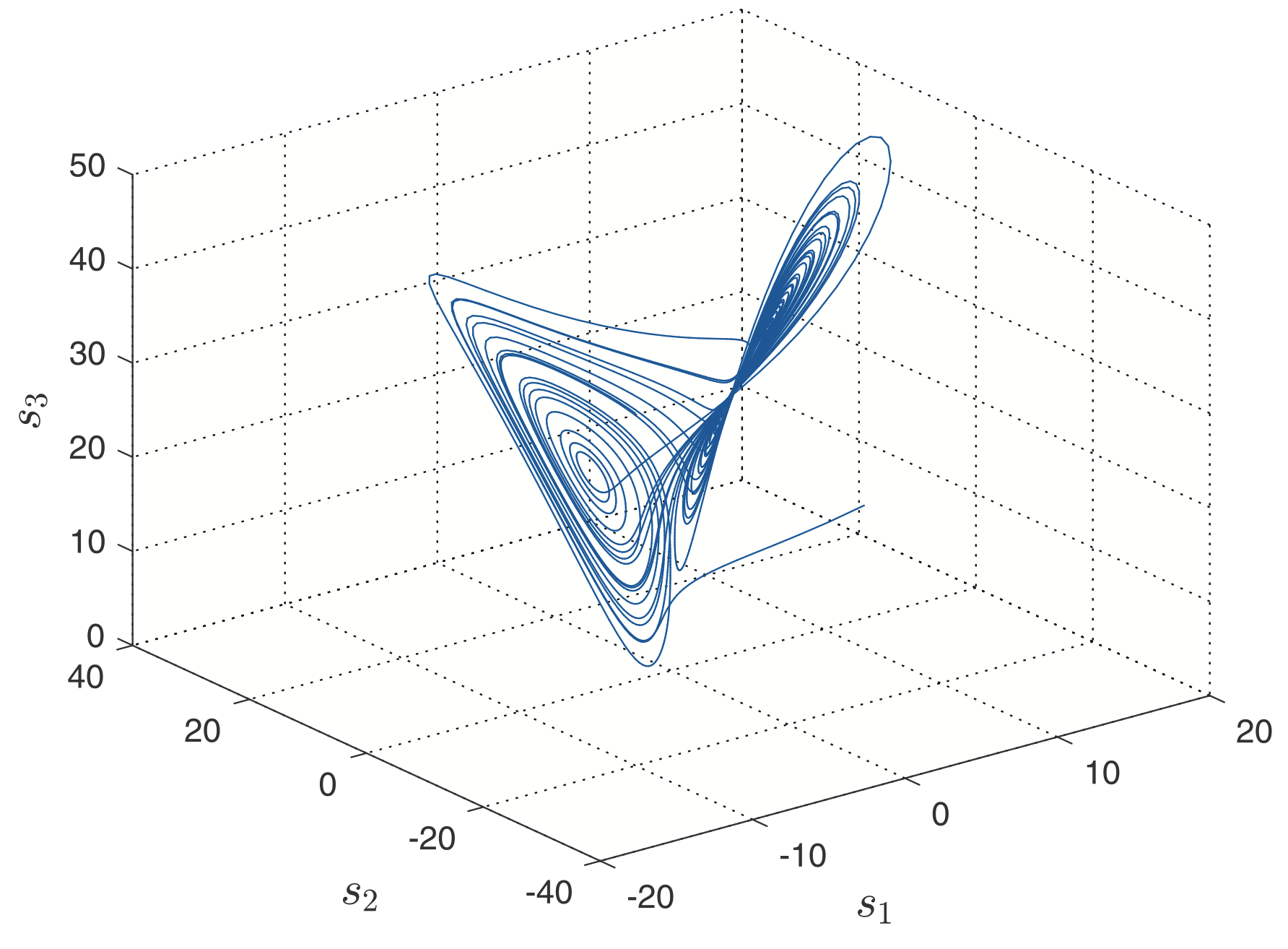

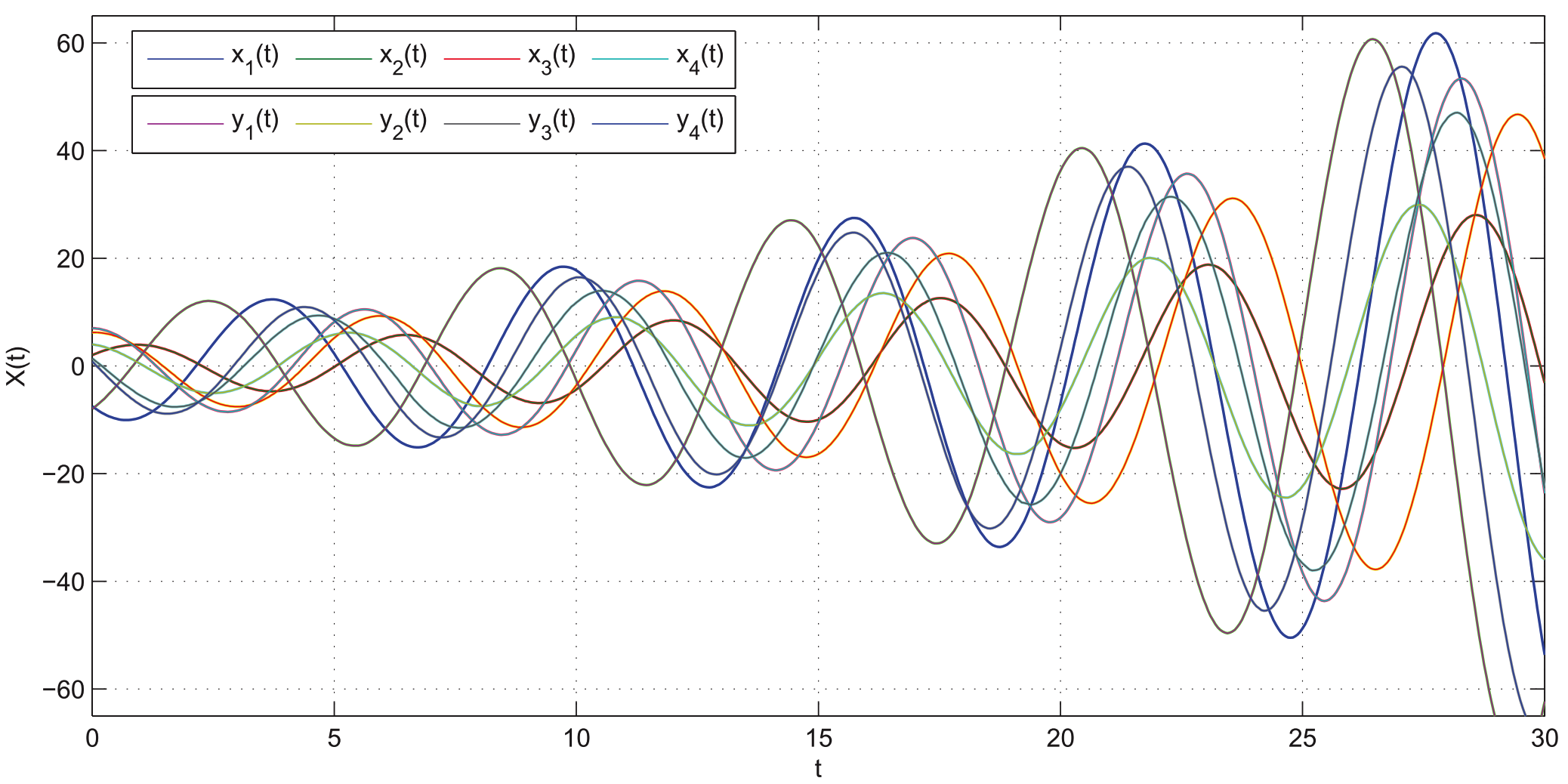

Yao Xu, Qiang Li, Wenxue Li. Periodically intermittent discrete observation control for synchronization of fractional-order coupled systems, Communications in Nonlinear Science and Numerical Simulation (CNSNS 2019). [link]


Yongbao Wu, Qiang Li, Wenxue Li. Novel aperiodically intermittent stability criteria for Markovian switching stochastic delayed coupled systems, Chaos: An Interdisciplinary Journal of Nonlinear Science (Chaos 2018). [link]

Professional Service

- Conference Reviewer:
  - Neural Information Processing Systems (NeurIPS), 2021, 2022, 2024;
  - International Conference on Machine Learning (ICML), 2023;
  - European Signal Processing Conference (EUSIPCO), 2022.

- Journal Reviewer:
  - IEEE Transactions on Signal Processing (TSP), 2022.